It was a spectacularly beautiful Saturday in San Francisco. Exactly the perfect day to do some field usability testing. But this was no ordinary field usability test. Sure, there’d been plenty of planning and organizing ahead of time. And there would be data analysis afterward. What made this test different from most usability tests?

- 16 people gathered to make 6 research teams

- Most of the people on the teams had never met

- Some of the research teams had people who had never taken part in usability

testing before - The teams were going to intercept people on the street, at libraries, in farmers’

- markets

Ever heard of Improv Everywhere? This was the UX equivalent. Researchers just appeared out of the crowd to ask people to try out a couple of designs and then talk about their experiences. Most of the interactions with participants were about 20 minutes long. That’s it. But by the time the sun was over the yardarm (time for cocktails, that is), we had data on two designs from 40 participants. The day was amazingly energizing.

How the day worked

The timeline for the day looked something like this:

8:00

Coordinator checks all the packets of materials and supplies

10:00

Coordinator meets up with all the researchers for a briefing

10:30

Teams head to their assigned locations, discuss who should lead, take notes, and intercept

11:00

Most teams reach their locations, check in with contacts (if there are contacts), set up

11:15-ish

Intercept the first participants and start gathering data

Break when needed

14:00

Finish up collecting data, head back to the meeting spot

14:30

Teams start arriving at the meeting spot with data organized in packets

15:00-17:00

Everybody debriefs about their experiences, observations

17:00

Researchers head home, energized about what they’ve learned

Later

Researchers upload audio and video recordings to an online storage space

On average, teams came back with data from 6 or 7 participants. Not bad for a 3-hour stretch of doing sessions.

The role of the coordinator

I was excited about the possibilities, about getting a chance to work with some old friends, and to expose a whole bunch of people to a set of design problems they had not been aware of before. If you have thought about getting everyone on your team to do usability testing and user research, but have been afraid of what might happen if you’re not with them, conducting a study by flash mob will certainly test your resolve. It will be a

lesson in letting go.

There was no way I could join a team for this study. I was too busy coordinating. And I wanted to be available in case there was some kind of emergency. (In fact, one team left the briefing without copies of the thing they were testing. So I jumped in a car to deliver to them.)

Though you might think that the 3-or-so hours of data collection might be dull and boring for the coordinator, there were all kinds of things for me to do: resolve issues with locations, answer questions about possible participants, reconfigure teams when people had to leave early. Cell phones were probably the most important tool of the day.

I had to believe that the planning and organizing I had done up front would work for people who were not me. And I had to trust that all the wonderful people who showed up to be the flash mob were as keen on making this work as I was. (They were.)

Keys to making flash mob testing work

I am still astonished that a bunch of people would show up on a Saturday morning to conduct a usability study in the street without much preparation. If your team is half as excited about the designs you are working on as this team was, taking a field trip to do a flash mob usability test should be a great experience. That is the most important ingredient to making a flash mob test work: people to do research who are engaged with the project, and enthusiastic about getting feedback from users.

Contrary to what you might think, coordinating a “flash” test doesn’t happen out of thin air, or a bunch of friends declaring, “Let’s put on a show!” Here are 10 things that made the day work really well to give us quick and dirty data:

1. Organize up front

2. Streamline data collection

3. Test the data collection forms

4. Minimize scripting

5. Brief everyone on test goals, dos and don’ts

6. Practice intercepting

7. Do an inventory check before spreading out

8. Be flexible

9. Check in

10. Reconvene the same day

Organize up front

Starting about 3 or 4 weeks ahead of time, pick the research questions, put together what needs to be tested, create the necessary materials, choose a date and locations, and recruit researchers.

Introduce all the researchers ahead of time, by email. Make the materials available to everyone to review or at least peek at as soon as possible. Nudge everyone to look at the stuff ahead of time, just to prepare.

Put together everything you could possibly need on The Day in a kit. I used a small roll-aboard suitcase to hold everything. Here’s my list:

- Pens (lots of them)

- Clipboards, one for each team

- Flip cameras (people took them but did most of the recording on their phones)

- Scripts (half a page)

- Data collecting forms (the other half of the page)

- Printouts of the designs, or device-accessible prototypes to test

- Lists of names and phone numbers for researchers and me

- Lists of locations, including addresses, contact names, parking locations, and public transit routes

- Signs to post at locations about the study

- Masking tape

- Badges for each team member – either company IDs, or nice printed pages with the first names and “Researcher” printed large

- A large, empty envelope

About 10 days ahead, I chose a lead for each of the teams (these were all people who I knew were experienced user researchers) and talked with them. I put all the stuff listed above in a large, durable envelope with the team lead’s name on it.

Streamline data collection

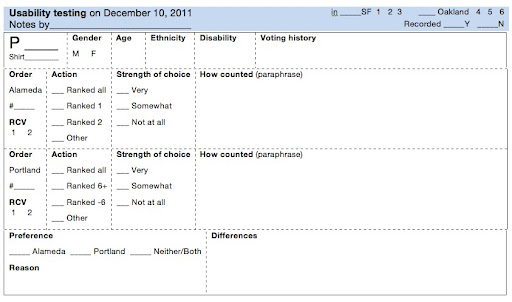

The sessions were going to be short, and the note-taking awkward because of doing this research in ad hoc places, so I wanted to make data collection as easy as possible. Working from a form I borrowed from Whitney Quesenbery, I made something that I hoped would be quick and easy to fill in and easy for me to understand what the data meant later.

|

| Data collector for our flash mob usability test |

The data collection form was the main thing I spent time on in the briefing before everyone went off to collect data. There are things I will emphasize more, next time, but overall, this worked pretty well. One note: It is quite difficult to collect qualitative data in the wild by writing things down. Better to audio record.

Test the data collection forms

While the form was reasonably successful, there were some parts of it that didn’t work that well. Though a version of the form had been used in other studies before, I didn’t ask enough questions about the success or failure of the open text (qualitative data) part of the form. I wanted that data desperately, but it came back pretty messy. Testing the data collection form with someone else would have told me what questions researchers would have about that (meta, no?), and I could have done something else. Next time.

Minimize scripting

Maximize participant time by dedicating as much time to the session as possible to their interacting with the design. That means that the moderator does nothing to introduce the session, instead relying on an informed consent form that one of the team members can administer to the next participant while the current one is finishing up.

The other tip here is to write out the exact wording for the session (with primary and follow up questions), and threaten the researchers with being flogged with a wet noodle if they don’t follow the script.

Brief everyone on test goals, dos and don’ts

All the researchers and I met up at 10am and had a stand-up meeting in which I thanked everyone profusely for joining me in the study. And then I talked about and took questions on:

- The main thing I wanted to get out of each session. (There was one key concept that we wanted to know whether people understood from the design.)

- How to use the data collection forms. (We walked through every field.)

- How to use the script. (“You must follow the script.”)

- How to intercept people, inviting them to participate. (More on this below.)

- Rules about recordings. (Only hands and voices, no faces.)

- When to check in with me. (When you arrive at your location; at the top of each hour, when you’re on the way back.)

- When and where to meet when they were done.

I also handed cash that the researchers could use for transit or parking or lunch, or just keep.

Practice intercepting people

Intercepting people to participate is the hardest part. You walk up to a stranger on the street asking them for a favor. This might not be bad in your town. But in San Francisco, there’s no shortage of competition. Homeless people, political parities registering voters, hucksters, buskers, and kids working for Greenpeace all wanting attention from passers-by. And there you are, trying to do a research study. So, how to get some attention without freaking people out? A few things that worked well:

- Put the youngest and/or best-looking person on the task.

- Smile and make eye contact.

- Using cute pets to attract people. Two researchers who own golden retrievers brought their lovely dogs with them, which was a nice icebreaker.

- Start off with what you’re not: “I’m not selling anything, and I don’t work for Greenpeace. I’m doing a research study.”

- Start by asking for what you want: “Would you have a few minutes to help us make ballots easier to use?”

- Take turns – it can be exhausting enduring rejection.

Do an inventory check before spreading out

Before the researchers went off to their assigned locations, I asked each team to check that they had everything they needed, which apparently was not thorough enough for one of my teams. Next time, I will ask each team to empty out the contents of the packet and check the contents. I’ll use the list of things I wanted to include in each team’s packet and my agenda items for the briefing to ask the teams to look for each item.

Be flexible

Even with lots of planning and organizing, things happen that you couldn’t have anticipated. Researchers don’t show up, or their schedules have shifted. Locations turn out to not be so perfect. Give teams permission to do whatever they think is the right thing to get the data – short of breaking the law.

Check in

Teams checked in when they found their location, between sessions, and when they were on their way back to the meeting spot. I wanted to know that they weren’t lost, that everything was okay, and that they were finding people to take part. Asking teams to check in also gave them permission to ask me questions or help them make decisions so they could get the best data, or tell me what they were doing that was different from the plan. Basically, it was one giant exercise in The Doctrine of No Surprise.

Reconvene the same day

I needed to get the data from the research teams at some point. Why not meet up again and share experiences? Turns out that the stories from each team were important to all the other teams, and extremely helpful to me. They talked about the participants they’d had and the issues participants ran into with the designs we were testing. They also talked about their experiences with testing this way, which they all seemed to love. Afterward, I got emails from at least half the group volunteering to do it again. They had all had an adventure, met a lot of new people, got some practice with skills, and helped the world be a become a better place through design.

Wilder than testing in the wild, but trust that it will work

On that Saturday in San Francisco the amazing happened: 16 people who were strangers to one another came together to learn from 40 users about how well a design worked for them. The researchers came out from behind their monitors and out of their labs to gather data in the wild. The planning and organizing that I did ahead of time let it feel like a flash mob event to the researchers, and it gave them room to improvise as long as they collected valid data. And it worked. (See the results.)

P.S. I did not originate this approach to usability testing. As far as I know, the first person to do it was Whitney Quesenbery in New York City in the autumn of 2010.

Sounds both brave and fun!

So did anyone take off their pants?

Hi Kathy,

It was super fun, and I was really pleased with the results. Not just the data, but how excited the team got about meeting users and interacting with them.

Dana

Hello Jeff,

No, no one took their pants off, but it happened to be the same day as Santa Con, which meant that there were at least 10,000 drunk people in santa outfits all over the city. Except for the naked santas who were trying to beat the world record for the largest number of naked santas in Washington Square at one time. How did you know if they were santas if they were naked, you ask? They kept their hats on.

Great adventure in the streets.

Dana