In the fall of 2012, I seized the opportunity to do some research I’ve wanted to do for a long time. Millions of users would be available and motivated to take part. But I needed to figure out how to do a very large study in a short time. By large, I’m talking about reviewing hundreds of websites. How could we make that happen within a couple of months?

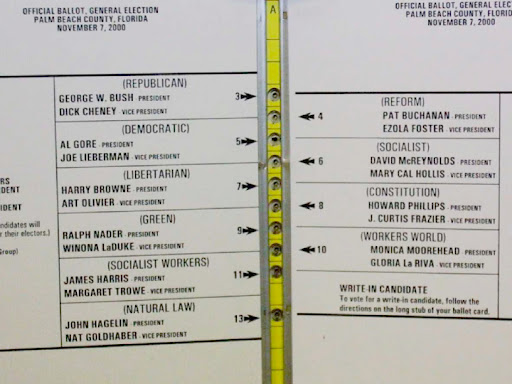

Do election officials and voters talk about elections the same way?

I had BIG questions. What were local governments offering on their websites, and how did they talk about it? And, what questions did voters have? Finally, if voters went to local government websites, were they able to find out what they needed to know? Continue reading “Crowd-sourced research: trusting a network of co-researchers”